How spoken media becomes the world’s biggest focus group

For decades, brands trying to understand their customers have faced the same frustrating trade-off. If you want real depth, you run interviews. They’re valuable, but slow, expensive, and limited to a handful of people who know they’re being studied. Insightful, yes, but impossible to scale.

The alternative has always been social media. You get volume and speed, but at the cost of authenticity. Typed text is curated, flattened, and stripped of tone. People type differently than they speak, so the emotion, nuance, and context that actually explain why people think what they think never show up.

This leaves brands stuck choosing between:

Depth without scale, or

Scale without truth

Spoken media finally changes that.

On TikTok, YouTube, and podcasts, people aren’t typing; they’re talking. They explain decisions, compare products, and express frustration, excitement, curiosity, or concern. They reveal what they love, what confuses them, and what gets in their way. And they do it at a scale interviews could never reach.

The challenge has always been measurement. Spoken media used to be “dark”: hard to capture, even harder to structure, and impossible to analyze at scale. The world was talking, but brands had no way to listen.

All Ears + KwantumLabs: Turning voice into structured intelligence

All Ears makes spoken media measurable, capturing and indexing millions of voice-based conversations from the platforms where today’s narratives actually form. KwantumLabs brings the analytical engine (deterministic taxonomies, emotional modeling, and structured brand tracking) that transforms that raw conversation into clean, decision-ready insight.

Together, we make it possible to see:

Not just what people say, but how they say it

Not just keywords, but the narratives shaping your category

Not just sentiment, but emotion, friction, trust, and intensity

Not just mentions, but the associations and patterns that signal real brand strength

“What excites me about long‑form podcasts and spoken word is that they finally let us study emotional sentiment at scale without having to steer people with survey questions. When people talk for 30 or 60 minutes about AI tools, you hear how brands actually fit into their lives, and how they say it: where they delight, where they frustrate, and why. That kind of unprompted, emotionally rich signal is a hugely promising complement to traditional research and short‑form social text, especially when you care about the emotions that drive brand preference.”

– Rogier Verhulst, Co-Founder, KwantumLabs.ai

The result is something entirely new: an always-on, interview-level understanding built from real, organic conversations, not staged prompts or shallow comment fields.

We deliver this however you need it:

Monthly intelligence reports, curated data streams, or direct API access to a sector-specific knowledge graph that organizes brands, topics, emotions, and narratives in your category.

This knowledge graph becomes your living intelligence layer, a dynamic, structured map of your entire market powered by spoken media, ready to plug into dashboards, workflows, and AI systems.

When you combine the scale of social conversation with the authenticity of spoken expression, you unlock a new kind of truth, one that reveals not only what your audience thinks, but why.

A look inside: What spoken media can reveal in a category

To illustrate the power of this approach, here’s a real example from the AI space, where we analyzed how people feel when they talk about major AI tools.

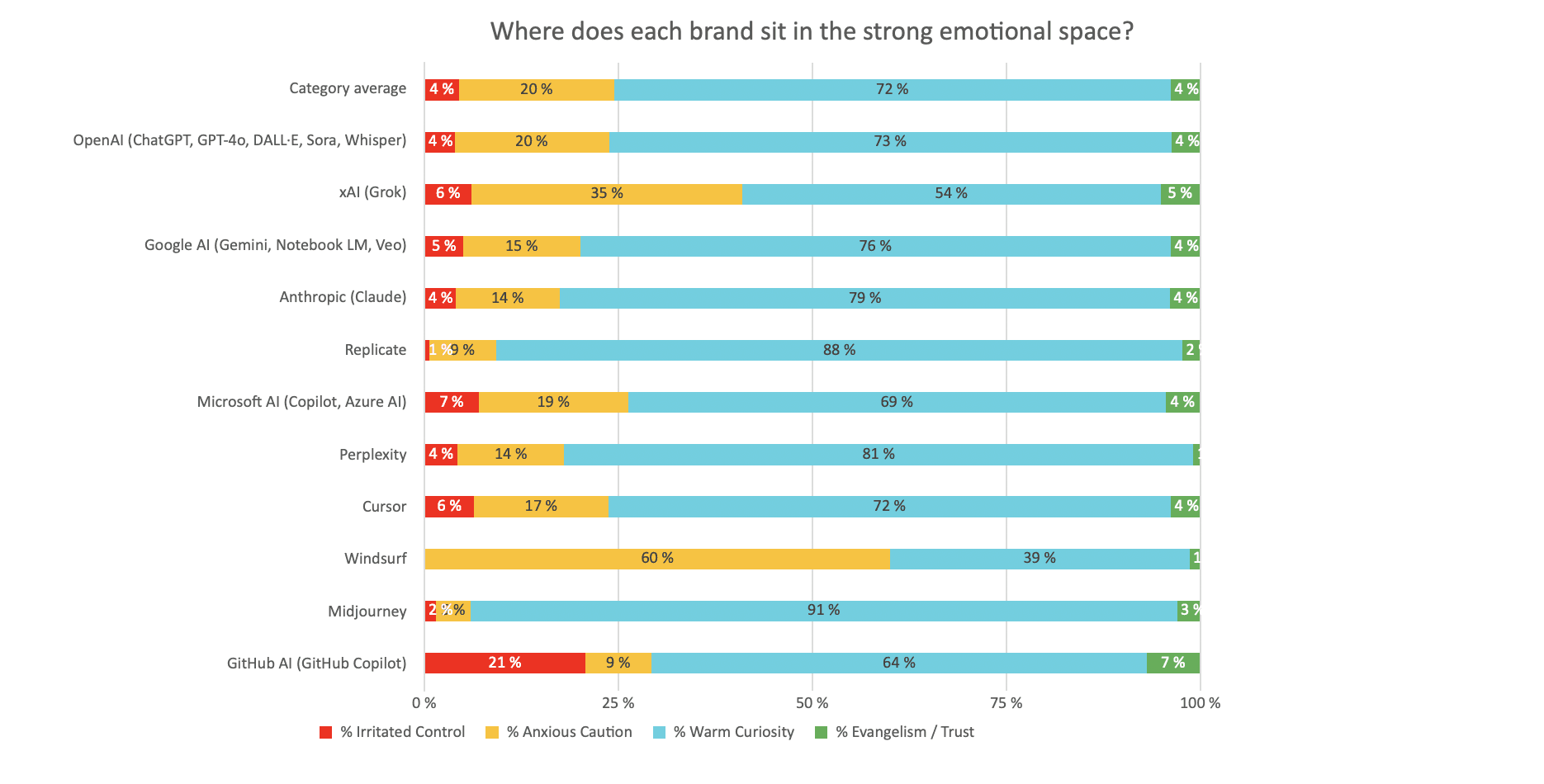

Instead of reducing emotion to “positive vs. negative,” spoken conversation lets us map brands across four intuitive emotional territories:

Warm Curiosity – positive exploration

Evangelism / Trust – deep confidence and reliance

Irritated Control – in-control frustration

Anxious Caution – low-control concern

These aren’t abstract labels; they point to what’s driving or blocking brand preference. Brands that sit in “Evangelism/Trust” tend to earn repeat use and advocacy, while those trending toward “Irritated Control” surface clear friction points that can be fixed. Similarly, brands stuck in “Warm Curiosity” may have awareness but lack the emotional momentum that creates long-term loyalty.

(Example: category-wide emotional footprint across strong mentions)

This chart shows how spoken conversations break down across the four emotional quadrants. It highlights that many AI brands live largely in Warm Curiosity, while a few show meaningful spikes in Evangelism or Irritated Control, signals of higher stakes and deeper user engagement. OpenAI, for instance, sits mostly in positive, low-friction emotions like Warm Curiosity, whereas xAI/Grok leans sharply toward negative, high-intensity reactions. In the AI landscape, emotional intensity matters more than awareness.

Brands with higher concentrations of high-agency emotions, whether positive or negative, have greater potential to shape user behavior and capture long-term value. The challenge is steering that intensity toward evangelism while minimizing the friction that breeds irritation.

It’s a quick way to see:

Which brands inspire trust

Which spark frustration

Which remain in early-stage exploration

And how emotional patterns differ across a category

For brands, these patterns translate directly into actions:

If trust is low → amplify use cases that communicate reliability and value

If frustration is high → identify and resolve the moments that trigger friction

If curiosity dominates → sharpen positioning to convert exploration into reliance

If caution rises → address concerns through education, clarity, and reassurance

Want to see what spoken media reveals in your category?

Together with KwantumLabs, we’re offering a discounted “category pulse” until December 15: a focused view of spoken conversations across TikTok, YouTube, and podcasts, plus an emotional snapshot of where brands land in the four core emotional territories of your category.